There’s been a recent rush of excitement on LinkedIn about the vividness of the latest animations generated by image transformers.

In particular, there’s a film clip with vividly realistic robots, in various states of terrible helplessness, bemoaning the prompts that led to their dire situations. I think it’s just an amusing recursive take on the Fourth Wall, or a variation on M.C. Escher’s famous Drawing Hands that draw themselves into existence.

But in many of the LinkedIn comments, people are seeing signs of robots becoming self-aware.

Anthropomorphism is bad enough with Large Language Models, but with image transformers, it might get completely out of hand—like the way very early cinema goers ran screaming from the theatre, convinced a locomotive was about to burst out of the screen and plough into the crowd. This is a new and deeper level of the Deep Fake problem. Even when smart people know the moving images are software generated, they tend to think the animations are real.

I’m calling for “cognitive calm”. Let’s try to unsuspend disbelief.

I don’t mean to underestimate the importance of image transformers and the dizzying rate of progress. I just want people to remember that with all AI today, “it’s just a model”.

As I understand them, image transformers are like LLMs; they are both pattern extrapolation machines.

LLMs are sometimes poo-poohed for “merely” completing sentences. That’s a bit of a simplification but the jibe does capture the essential mindlessness of neural network AI models.

The truth is that the output of any LLM-based generator is an evidence-based prediction of how a passage of text is going to continue, in a way that is consistent with the prompt. Today’s trained LLMs reflect trillions of pieces of text sampled from the wild, from which are inferred deep statistical patterns, sufficient to generate sensible strings of sentences. The LLMs not only follow the rules of syntax and grammar but also follow stylistic conventions and other patterns found in the training text. Hence the name language model — it’s an empirical mechanical representation of how real-world text is constructed.

So, if sufficient writers happen to have described different aspects of the behaviour of blue coloured birds in winter, the LLM will be able to string together a fair number of sentences that are consistent with the whole of the body of work it has ingested. Throw in some randomness so the writing isn’t overly repetitive, some feedback loops and unwritten prompts about beginnings, middles and ends, and the result looks like a polished story which embodies the wealth of written experience on the subject.

Just as language transformers make predictions of sensible text passages based on patterns extracted from real life writing, vision transformers predict how things should probably look in brand new images, based on patterns in real world pictures.

AI is certainly amazing at generating reasonable images of complex things and interactions. Yet the properties and relationships of things are not perceived or deduced by these models or understood in any way. The exercise is not to model causality but only appearances.

None of the generative pretrained transformers (GPTs) simulate anything. There is no abstraction of real-world phenomena in mathematical equations; rather, there is merely a probabilistic calculation what things should look or sound like.

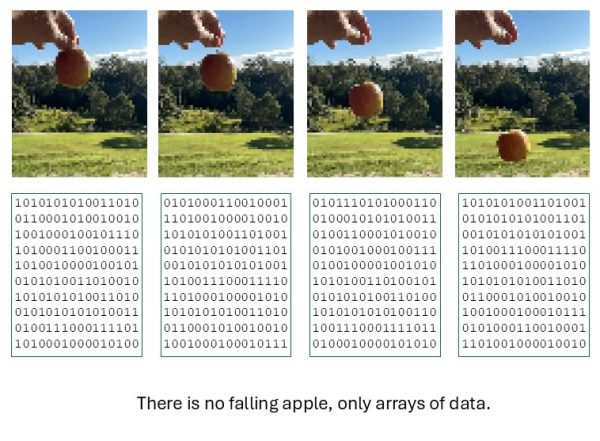

Consider what’s going on when an image model is trained on an apple falling from a tree (or rather, on billions of clips of similar objects moving under similar circumstances). Videos are digitised and coded to create arrays of data, which are fed into the neural net and crunched together with other arrays. Patterns in the data result in the iterative refining of a net’s constituent interconnections and weights, which collectively come to instantiate what’s been “learned” from the training. When similar patterns recur in the inputs, reinforcement results and certain weights are boosted.

It’s tempting to think that such an AI has learned the laws of physics through observation, but all it’s doing is establishing the statistics of how series of arrays of data tend to develop in particular ways. And so, based on its training, the image model can be prompted to calculate a sequence of arrays of reasonable data values to follow from some starting condition.

The AI is unaware that the array data structures were earlier designed by engineers to codify the intensity and wavelength of light sampled at different points by a two-dimensional camera sensor, and furthermore, that the sequencing of the arrays correspond to constant time intervals. The AI doesn’t know that cameras are themselves models of the human retina. It doesn’t have to know that, because it’s not watching a video—it’s only crunching data. An altogether different agent later on gets to experience the value arrays as periods of vision in which an apple is falling.

The image transformer has no more understanding of physics than an LLM has of the human condition. Sure, ChatGPT might be able compose a vivid story with compelling characters we identity with, to the extent it might even make us cry, but only after ingesting billions of arrangements of adjectives, nouns and verbs which were earlier designed by artists to evoke the emotional response.

If we feed an image model enough video with fruit and objects in motion, then we can prompt it to compute a string of arrays containing patterns that happen to correspond to what a falling apple looks like. Likewise, if a model is trained on millions of hours of humans going about their business, it will be able to generate patterns of pixels which to us look like other people talking and laughing, sharing a joke, or even doing something as complex as flirting.

The stuff of any neural network, whether it’s designed for language or vision, is nothing but interconnects and weights. It happens that some configurations of interconnects and weights can be mapped by humans onto real world features—patterns of lights and sounds—with which we identify. But the model is only learning past patterns and extrapolating new ones.

There are no rules in the worlds which are modelled by these AIs—only statistics. We’ve probably all seen AI-generated images where hands have shifting numbers of fingers and people walk through walls. I appreciate the models are getting better but all the same, these sorts of errors illuminate exactly what it is that’s being modelled—and it was never understanding.

When an AI model for example predicts how a child will respond to a parent in some situation, verbally or visually, it has computed a lot of numbers which correspond to images and/or words which in turn seem sensible to us humans. Strictly speaking, it’s only a coincidence when the AI model comes up with something that’s even relatable let alone accurate.

Note: The images in this blog post are for illustration only. None were generated by AI; they’re the figments of a natural intelligence.