This blog post is another companion to my on-demand speech at FIDO #Authenticate2022, happening this week in Seattle.

In my speech I talk about the quasi-standard basket of FIDO capabilities that are adjacent to the most important security features of digital platforms today, enabling not only seamless user authentication, but also the signing of all important data at every point in the data supply chain. We should treat data as a resource, and that means being sure about all the various properties that make data valuable.

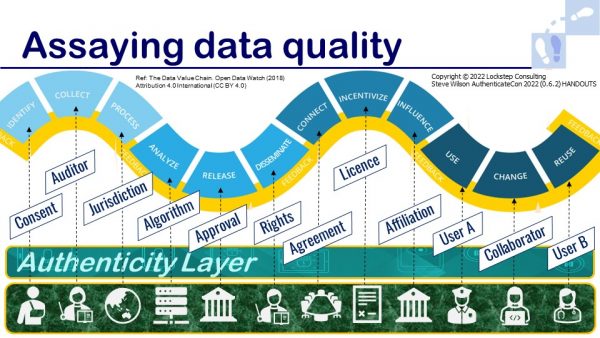

The diagram above, adapted from my slides, builds on the Data Supply Chain described by Open Data Watch in 2018. I have underpinned the data supply chain with an authenticity layer and added labels for the types of property that we can specify and bind to data using FIDO-like technologies.

My suggestion for an authenticity layer (first described at #Authenticate2020) is a reaction to the mythology that the internet is missing an “identity layer”. I feel this has been a misconception that romanticises identity and misframes the problems we have had for decades in deploying reusable identity.

Data has obviously become big business, right or wrong. Data supply chains (aka information value chains) have been forming for decades, as described by Open Data Watch and many others.

So it’s a pretty standard view now that information has a lifecycle. It starts with collection. Then data is disseminated, and value is added to it through many potential steps. The diagram shows a superset of potential data processing stages, not all of which apply to every application.

Now let’s envision data as a true utility. Like all metaphors, this one can break down, but it’s undeniable that data is used as a kind of resource or raw material, and as such, there are properties or attributes that make data valuable.

So as data moves from one process to the next, what questions should we be asking about it? If data is identifiable and personal, has the processor got consent? What was the process for collection? If standards apply, as they do in medical research, then we may need an auditor, and their audit report might be attached to the data.

As data flows internationally, it’s becoming more important to know where exactly it was processed. So jurisdiction is an attribute of interest. Bias is a huge issue, so we often want to know what algorithms were used along the way. As data gets released, that release may need to be approved by accountable individuals. And what usage rights attach to data? We tend to connect data across applications according to business rules, and data processors may need to agree to a usage licence.

These questions typify a way to frame data as a utility, and to start to think about how we can assay it; that is, hallmark data through its lifecycle, so that its properties are visible and verifiable. The question of attribution arises. In a spin on some old conventional wisdom, it’s not just what you know, but how you know it.
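To make the idea of hallmarking a little more concrete, here is a minimal sketch of binding a property claim (an audit result, a jurisdiction, a usage licence) to one specific piece of data. It assumes each claim is tied to a SHA-256 digest of the data and tagged by the party making it. The `Attestation` record and the `attest`/`verify` helpers are hypothetical, and HMAC-SHA256 with a shared key stands in for the public-key signatures a FIDO authenticator would actually produce, purely so the sketch runs on the Python standard library.

```python
import hashlib
import hmac
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class Attestation:
    """A hypothetical 'hallmark': one property claim bound to one piece of data."""
    data_digest: str  # SHA-256 of the data the claim is about
    attester: str     # who makes the claim, e.g. an auditor or a processor
    claim: dict       # the property itself, e.g. {"jurisdiction": "AU"}
    tag: str          # HMAC tag: a stand-in for a real public-key signature


def _payload(data_digest: str, attester: str, claim: dict) -> bytes:
    # Canonical JSON so that the attester and the verifier MAC identical bytes.
    return json.dumps(
        {"data": data_digest, "attester": attester, "claim": claim},
        sort_keys=True, separators=(",", ":"),
    ).encode()


def attest(data: bytes, attester: str, claim: dict, key: bytes) -> Attestation:
    digest = hashlib.sha256(data).hexdigest()
    tag = hmac.new(key, _payload(digest, attester, claim), hashlib.sha256).hexdigest()
    return Attestation(digest, attester, claim, tag)


def verify(data: bytes, att: Attestation, key: bytes) -> bool:
    # The claim only holds for this exact data: any change alters the digest.
    if hashlib.sha256(data).hexdigest() != att.data_digest:
        return False
    expected = hmac.new(
        key, _payload(att.data_digest, att.attester, att.claim), hashlib.sha256
    ).hexdigest()
    # Constant-time comparison; fails if the claim or attester was altered.
    return hmac.compare_digest(expected, att.tag)


# Usage with an illustrative key and record (both hypothetical).
key = b"auditor-secret"
record = b"patient_id,reading\n17,98.6\n"
att = attest(record, "auditor-1", {"audit": "passed", "jurisdiction": "AU"}, key)
print(verify(record, att, key))                 # True
print(verify(record + b"18,99.1\n", att, key))  # False: the data changed
```

Because the claim covers the digest of the data rather than the data's location or name, the hallmark travels with the data and fails as soon as either the data or the claim is altered.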

The verification of data, including its origin and the way it is presented from one processor to the next, can follow patterns similar to those used to verify identity and credentials. This framing shows that authentication technology is much bigger than identity. We should be talking about the quality of all data, and the authenticity of everything that we use online.
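Verifying how data was presented from one processor to the next can be sketched as a hash-linked list of provenance records, one per processing stage, each tagged by the processor that performed it. A verifier checks every link and every tag, then confirms that the final record matches the data it actually received. This is an illustrative assumption rather than a FIDO protocol: `add_step`, `verify_chain` and the record fields are hypothetical, and per-processor HMAC keys stand in for the public-key signatures and key registration an authenticity layer would really rely on.

```python
import hashlib
import hmac
import json


def _digest(b: bytes) -> str:
    return hashlib.sha256(b).hexdigest()


def _canonical(record: dict) -> bytes:
    # Deterministic serialisation so every party hashes the same bytes.
    return json.dumps(record, sort_keys=True, separators=(",", ":")).encode()


def add_step(chain: list, processor: str, stage: str, output: bytes, key: bytes) -> list:
    """Append a hypothetical provenance record linked to the previous record."""
    prev = _digest(_canonical(chain[-1])) if chain else None
    body = {"processor": processor, "stage": stage,
            "output": _digest(output), "prev": prev}
    # The tag covers the body before the "tag" field is added to it.
    body["tag"] = hmac.new(key, _canonical(body), hashlib.sha256).hexdigest()
    return chain + [body]


def verify_chain(chain: list, final_output: bytes, keys: dict) -> bool:
    """Check each link and tag, and that the last record matches the data in hand."""
    prev = None
    for record in chain:
        body = {k: v for k, v in record.items() if k != "tag"}
        if body["prev"] != prev:
            return False  # a step was removed, reordered or altered
        key = keys.get(record["processor"])
        if key is None:
            return False  # processor is not known to the verifier
        expected = hmac.new(key, _canonical(body), hashlib.sha256).hexdigest()
        if not hmac.compare_digest(expected, record["tag"]):
            return False  # record was not produced by the claimed processor
        prev = _digest(_canonical(record))
    return bool(chain) and chain[-1]["output"] == _digest(final_output)


# Usage: two hypothetical stages of a data supply chain.
keys = {"collector": b"collector-key", "analyst": b"analyst-key"}
raw = b"raw survey responses"
cleaned = b"cleaned survey responses"
chain = add_step([], "collector", "collection", raw, keys["collector"])
chain = add_step(chain, "analyst", "cleaning", cleaned, keys["analyst"])
print(verify_chain(chain, cleaned, keys))      # True
print(verify_chain(chain[1:], cleaned, keys))  # False: collection step missing
```

Linking each record to the digest of the one before it is what makes the chain of custody itself verifiable: dropping, reordering or rewriting any stage breaks every later link.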